And finally, we will also see how to group and aggregate on multiple columns. Pandas is a great Python module for manipulating your dataset as a DataFrame, and it provides many functions that perform these manipulations efficiently. Sometimes you need to divide two columns in pandas, and in this tutorial you will learn how to do that using different methods. The agg() method allows us to specify multiple functions to apply to each column.

Below, I group by the sex column and then apply multiple aggregate methods to the total_bill column. Inside the agg() method, I pass a dictionary with total_bill as the key and a list of aggregate methods as the value. Aggregation is the process of computing a summary statistic for each group: an aggregate function returns a single aggregated value per group. After splitting the data into groups with the groupby() function, you can perform several aggregation operations on the grouped data.
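A minimal sketch of the grouping just described. Since the tips dataset itself is not included here, the snippet builds a small made-up DataFrame that only borrows its column names (sex, day, total_bill, tip); the numbers are illustrative, not real data.

```python
import pandas as pd

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})

# Group by "sex", then apply several aggregate methods to "total_bill".
# The dict key names the column; the list holds the aggregate methods.
bill_stats = tips.groupby("sex").agg({"total_bill": ["mean", "sum", "count"]})
print(bill_stats)
```

Because a list of methods is passed, the result has one column per (column, method) pair, e.g. ("total_bill", "mean").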

Spark SQL also supports advanced aggregations, which perform multiple aggregations over the same input record set via the GROUPING SETS, CUBE, and ROLLUP clauses. Grouping expressions and advanced aggregations can be mixed in the GROUP BY clause and nested in a GROUPING SETS clause; see the Mixed/Nested Grouping Analytics section of the Spark SQL documentation for details. When a FILTER clause is attached to an aggregate function, only the matching rows are passed to that function.
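The FILTER behavior can be tried without a Spark cluster. The sketch below swaps in SQLite through Python's built-in sqlite3 module, assuming SQLite 3.30 or newer (the first version with FILTER on aggregates); note that SQLite does not support GROUPING SETS, CUBE, or ROLLUP. The sales table and its rows are hypothetical.

```python
import sqlite3

# In-memory database with a hypothetical sales table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (warehouse TEXT, product TEXT, quantity INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("East", "apple", 10), ("East", "pear", 5), ("West", "apple", 7), ("West", "pear", 3)],
)

# FILTER passes only the matching rows to that one aggregate;
# the unfiltered SUM still sees every row in the group.
rows = conn.execute(
    """
    SELECT warehouse,
           SUM(quantity) AS total_qty,
           SUM(quantity) FILTER (WHERE product = 'apple') AS apple_qty
    FROM sales
    GROUP BY warehouse
    ORDER BY warehouse
    """
).fetchall()
print(rows)  # [('East', 15, 10), ('West', 10, 7)]
conn.close()
```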

A pivot table is composed of counts, sums, or other aggregations derived from a table of data. You may have used this feature in spreadsheets, where you choose the rows and columns to aggregate on and the values for those rows and columns. It lets us summarize data grouped by different values, including values in categorical columns. Pandas comes with a whole host of SQL-like aggregation functions you can apply when grouping on one or more columns; this is Python's closest equivalent to dplyr's group_by + summarise logic. Here's a quick example of how to group on one or multiple columns and summarise data with aggregation functions using pandas.
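A short sketch of grouping on one and on several columns, followed by the same sums laid out with pd.pivot_table(). The DataFrame is a made-up sample that only reuses the tips column names.

```python
import pandas as pd

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})

# One grouping column, one summary statistic per group
by_sex = tips.groupby("sex")["total_bill"].sum()

# Several grouping columns, different statistics per column
# (roughly dplyr's group_by + summarise)
by_sex_day = tips.groupby(["sex", "day"]).agg({"total_bill": "sum", "tip": "mean"})

# The same sums as a pivot table: rows = sex, columns = day
pivot = pd.pivot_table(tips, values="total_bill", index="sex", columns="day", aggfunc="sum")
print(pivot)
```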

One of the most basic analysis functions is grouping and aggregating data. In some cases, this level of analysis may be sufficient to answer business questions. In other instances, this activity might be the first step in a more complex data science analysis. In pandas, the groupby() function can be combined with one or more aggregation functions to quickly and easily summarize data.

This concept is deceptively simple, and most new pandas users will grasp it quickly. However, they might be surprised at how useful complex aggregation functions can be for supporting sophisticated analysis. You can pass various types of syntax as the argument to the agg() method. I chose a dictionary because that syntax will be helpful when we want to apply aggregate methods to multiple columns later on in this tutorial.
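Here is a sketch of three common argument forms for agg(): a string, a list, and a dictionary. The data is a made-up sample with the tips column names.

```python
import pandas as pd

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})
grouped = tips.groupby("sex")

# 1) A single string: one statistic; on one column the result is a Series
mean_bill = grouped["total_bill"].agg("mean")

# 2) A list of strings: one output column per statistic
bill_min_max = grouped["total_bill"].agg(["min", "max"])

# 3) A dict: choose the statistic per column; this scales to many columns
per_column = grouped.agg({"total_bill": "mean", "tip": "max"})
```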

For example, the species.csv file that we've been working with is a lookup table. It contains the genus, species, and taxa code for 55 species, and the same unique species codes identify these species in our survey data. Rather than adding three more columns (genus, species, and taxa) to each row of the 35,549-line Survey data table, we can maintain the shorter table with the species information.

When we want to access that information, we can create a query that joins the additional columns of information to the Survey data.

Calling to_dict() on a DataFrame creates a dictionary covering all of its columns, so we then select the column we need from that "big" dictionary.

One area that needs to be discussed is that there are multiple ways to call an aggregation function. As shown above, you may pass a list of functions to apply to one or more columns of data.

The most common aggregation functions are a simple average or summation of values. As of pandas 0.20, you can call an aggregation function on one or more columns of a DataFrame.

In SQL, the GROUP BY clause divides the rows returned from the SELECT statement into groups. For each group, you can apply an aggregate function, e.g., SUM() to calculate the sum of items or COUNT() to get the number of items in the group. For example, in our dataset, I want to group by the sex column and then find the mean bill size across the total_bill column.
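A sketch of both ideas on a made-up sample with the tips column names: DataFrame.agg() applied to columns without any grouping, and the pandas counterpart of a SQL GROUP BY with AVG().

```python
import pandas as pd

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})

# DataFrame.agg (pandas >= 0.20) on whole columns, no grouping
overall = tips[["total_bill", "tip"]].agg(["sum", "mean"])

# Roughly: SELECT sex, AVG(total_bill) FROM tips GROUP BY sex
mean_bill = tips.groupby("sex")["total_bill"].mean()
print(mean_bill.round(2))
```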

Instructions for aggregation are provided in the form of a Python dictionary or list. The dictionary keys specify the columns on which you'd like to perform operations, and the dictionary values specify the function to run.

In Spark SQL, the GROUPING SETS clause specifies multiple levels of aggregation in a single statement: it computes aggregations based on multiple grouping sets.
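To make the grouping-set idea concrete without Spark, the sketch below emulates it in pandas: one groupby per grouping set, stacked together like a UNION ALL. The grouping sets chosen, (warehouse, product), (warehouse), and the empty set, are the ones ROLLUP(warehouse, product) expands to. This is an illustration under assumptions (hypothetical sales table), not how Spark implements the clause.

```python
import pandas as pd

# Hypothetical sales table
sales = pd.DataFrame({
    "warehouse": ["East", "East", "West", "West"],
    "product": ["apple", "pear", "apple", "pear"],
    "quantity": [10, 5, 7, 3],
})

# One aggregation per grouping set
by_wh_prod = sales.groupby(["warehouse", "product"], as_index=False)["quantity"].sum()  # (warehouse, product)
by_wh = sales.groupby("warehouse", as_index=False)["quantity"].sum()  # (warehouse)
grand_total = pd.DataFrame({"quantity": [sales["quantity"].sum()]})  # () = global aggregate

# Stack the legs like UNION ALL; columns a leg did not group by become NaN
grouping_sets = pd.concat([by_wh_prod, by_wh, grand_total], ignore_index=True)
print(grouping_sets)
```

Two grouping columns give three grouping sets here, which matches the N+1 rule for ROLLUP.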

ROLLUP is a shorthand for GROUPING SETS. For example, GROUP BY warehouse, product WITH ROLLUP or GROUP BY ROLLUP(warehouse, product) is equivalent to GROUP BY GROUPING SETS((warehouse, product), (warehouse), ()). GROUP BY ROLLUP(warehouse, product, ) is equivalent to GROUP BY GROUPING SETS(, , , ()). A ROLLUP specification with N elements results in N+1 grouping sets.

The GROUP BY clause is often used with aggregate functions such as AVG(), COUNT(), MAX(), MIN(), and SUM(). In this case, the aggregate function returns the summary information per group.

For example, given groups of products in several categories, the AVG() function returns the average price of products in each category.

Pandas' to_dict() is a versatile function for converting a DataFrame or Series into a dictionary. In most use cases, to_dict() creates a dictionary of dictionaries: it uses the column names as keys, and for each column it creates an inner dictionary of the column values keyed by the index.

The standard pandas aggregation functions and pre-built functions from the Python ecosystem will meet many of your analysis needs.

However, you will likely want to create your own custom aggregation functions. This article will quickly summarize the basic pandas aggregation functions and show examples of more complex custom aggregations. Whether you are a new or more experienced pandas user, I think you will learn a few things from this article. We can also group by multiple columns and apply an aggregate method on a different column.

Below, I group by people's gender and day of the week and find the total of each group's bills. Most examples in this tutorial use simple aggregate methods like calculating the mean, sum, or count. However, with groupby operations, we have the flexibility to apply custom lambda functions. Notice that I have used different aggregation functions for different features by passing them in a dictionary with the corresponding operation to be performed. This allowed me to group and apply computations on nominal and numeric features simultaneously.
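A sketch of the three patterns in this paragraph, on a made-up sample with the tips column names: grouping by two columns, a custom lambda (here the range, max minus min, with the output column renamed), and a dictionary mixing a nominal column (day) with a numeric one.

```python
import pandas as pd

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})

# Group by two columns and sum a third
bill_by_sex_day = tips.groupby(["sex", "day"])["total_bill"].sum()

# Custom lambda: range = max - min, then rename the single output column
bill_range = (
    tips.groupby("sex")
    .agg({"total_bill": lambda s: s.max() - s.min()})
    .rename(columns={"total_bill": "total_bill_range"})
)

# Different functions per column: nominal "day", numeric "total_bill"
mixed = tips.groupby("sex").agg({"day": "nunique", "total_bill": "mean"})
```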

The preceding discussion focused on aggregation for the combine operation, but there are more options available.

In SQL, the GROUP BY statement is often used with aggregate functions (COUNT(), MAX(), MIN(), SUM(), AVG()) to group the result set by one or more columns. The GROUP BY clause is used in a SELECT statement to group rows into a set of summary rows by the values of columns or expressions.

In this tutorial, we will also learn how to convert two columns of a DataFrame into a dictionary. This is a common situation. We will first see the solution that I used for a while, based on the zip() and dict() functions. Just recently, I came across the pandas to_dict() function.
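A sketch of the zip()/dict() approach alongside two ways of building a Series with the key column as its index and then calling to_dict(). The species codes and genera are sample values standing in for a lookup table like the species table mentioned earlier.

```python
import pandas as pd

# Sample two-column lookup: species code -> genus
species = pd.DataFrame({
    "species_id": ["NL", "DM", "PF"],
    "genus": ["Neotoma", "Dipodomys", "Perognathus"],
})

# zip() + dict(): pair up the two columns element by element
via_zip = dict(zip(species["species_id"], species["genus"]))

# to_dict() on a Series whose index holds the keys (two ways to build it)
via_set_index = species.set_index("species_id")["genus"].to_dict()
via_series = pd.Series(species["genus"].values, index=species["species_id"]).to_dict()

# to_dict() on the whole DataFrame instead gives a dict of dicts keyed by column
nested = species.to_dict()
```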

Next, we will see two ways to use the to_dict() function to convert two columns into a dictionary.

Back to aggregation: the tuple approach is limited to applying one aggregation at a time to a specific column. If I need to rename columns, I use the rename() function after the aggregations are complete. In some specific instances, the list approach is a useful shortcut.
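A sketch contrasting the tuple approach (pandas named aggregation, available from pandas 0.25, where each keyword takes exactly one (column, function) tuple) with a dictionary aggregation followed by rename(). The output names mean_bill and max_tip are hypothetical, and the data is a made-up sample with the tips column names.

```python
import pandas as pd

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})

# Tuple approach: one (column, function) pair per output column
named = tips.groupby("sex").agg(
    mean_bill=("total_bill", "mean"),
    max_tip=("tip", "max"),
)

# Dictionary approach, then rename() once the aggregation is complete
renamed = (
    tips.groupby("sex")
    .agg({"total_bill": "mean", "tip": "max"})
    .rename(columns={"total_bill": "mean_bill", "tip": "max_tip"})
)
```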

I will reiterate, though, that I think the dictionary approach is the most robust choice for the majority of situations. In the context of this article, an aggregation function is one that takes multiple individual values and returns a summary. In the majority of cases, this summary is a single value.

In this tutorial, you have learned how to use the PostgreSQL GROUP BY clause to divide rows into groups and apply an aggregate function to each group.

Below, I group by the sex column and apply a lambda expression to the total_bill column. The expression finds the range of total_bill values, that is, the maximum value minus the minimum value. I also rename the single output column so it's understandable.

Similar to the SQL GROUP BY clause, the PySpark groupBy() function collects identical data into groups on a DataFrame so that you can perform aggregate functions on the grouped data. In this article, I will explain several groupBy() examples using PySpark.

We can apply multiple functions at once by passing a list or dictionary of functions to aggregate with, which outputs a DataFrame. We can use the groupby() function to split a DataFrame into groups and apply different operations to them. Aggregation means computing summary statistics for each group created, for example the mean, min, max, or sum.

Often you may want to group and aggregate by multiple columns of a pandas DataFrame. Fortunately, this is easy to do using the pandas .groupby() and .agg() functions. When multiple statistics are calculated on columns, the resulting DataFrame will have a MultiIndex on the column axis. The MultiIndex can be difficult to work with, and I typically have to rename columns after a groupby operation. The output of a groupby and aggregation operation can be either a pandas Series or a pandas DataFrame, which can be confusing for new users. As a rule of thumb, if you calculate more than one column of results, your result will be a DataFrame.
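A sketch of the Series-versus-DataFrame rule and of flattening a column MultiIndex, using a hypothetical player table grouped by team and position (a list of column names passed to groupby). Joining the two levels with "_" is just one naming convention.

```python
import pandas as pd

# Hypothetical player data
players = pd.DataFrame({
    "team": ["A", "A", "A", "B", "B"],
    "position": ["G", "G", "F", "G", "F"],
    "age": [24, 30, 28, 22, 35],
    "points": [10, 20, 15, 8, 12],
})

# A list of column names gives several grouping variables
grouped = players.groupby(["team", "position"])

# One column of results: a Series
mean_age = grouped["age"].agg("mean")

# Several statistics on several columns: a DataFrame with MultiIndex columns
stats = grouped[["age", "points"]].agg(["mean", "max"])

# Flatten ("age", "mean") -> "age_mean", etc.
stats.columns = ["_".join(col) for col in stats.columns]
print(stats)
```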

For a single column of results, the agg function will, by default, produce a Series. It's simple to extend this to work with multiple grouping variables. Say you want to summarise player age by team AND position: you can do this by passing a list of column names to groupby instead of a single string value.

The result of an inner join of survey_sub and species_sub is a new DataFrame that contains the combined set of columns from survey_sub and species_sub.

It only contains rows that have two-letter species codes that are the same in both the survey_sub and species_sub DataFrames. When we concatenated our DataFrames, we simply added them to each other, stacking them either vertically or side by side. Another way to combine DataFrames is to use columns in each dataset that contain common values. Combining DataFrames using a common field is called "joining".
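A minimal inner-join sketch with pd.merge(). The survey_sub and species_sub names follow the text, but their rows are hypothetical: the survey contains a code (PE) with no match in the species table, and the species table contains a code (PF) never seen in the survey.

```python
import pandas as pd

# Hypothetical subsets of the survey and species tables
survey_sub = pd.DataFrame({
    "record_id": [1, 2, 3, 4],
    "species_id": ["NL", "DM", "DM", "PE"],
})
species_sub = pd.DataFrame({
    "species_id": ["NL", "DM", "PF"],
    "genus": ["Neotoma", "Dipodomys", "Perognathus"],
})

# Inner join on the join key: keep only codes present in BOTH tables
merged = pd.merge(left=survey_sub, right=species_sub, how="inner", on="species_id")
print(merged)
```

Record 4 (PE) and the PF lookup row both drop out, and the result carries the combined columns of the two inputs.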

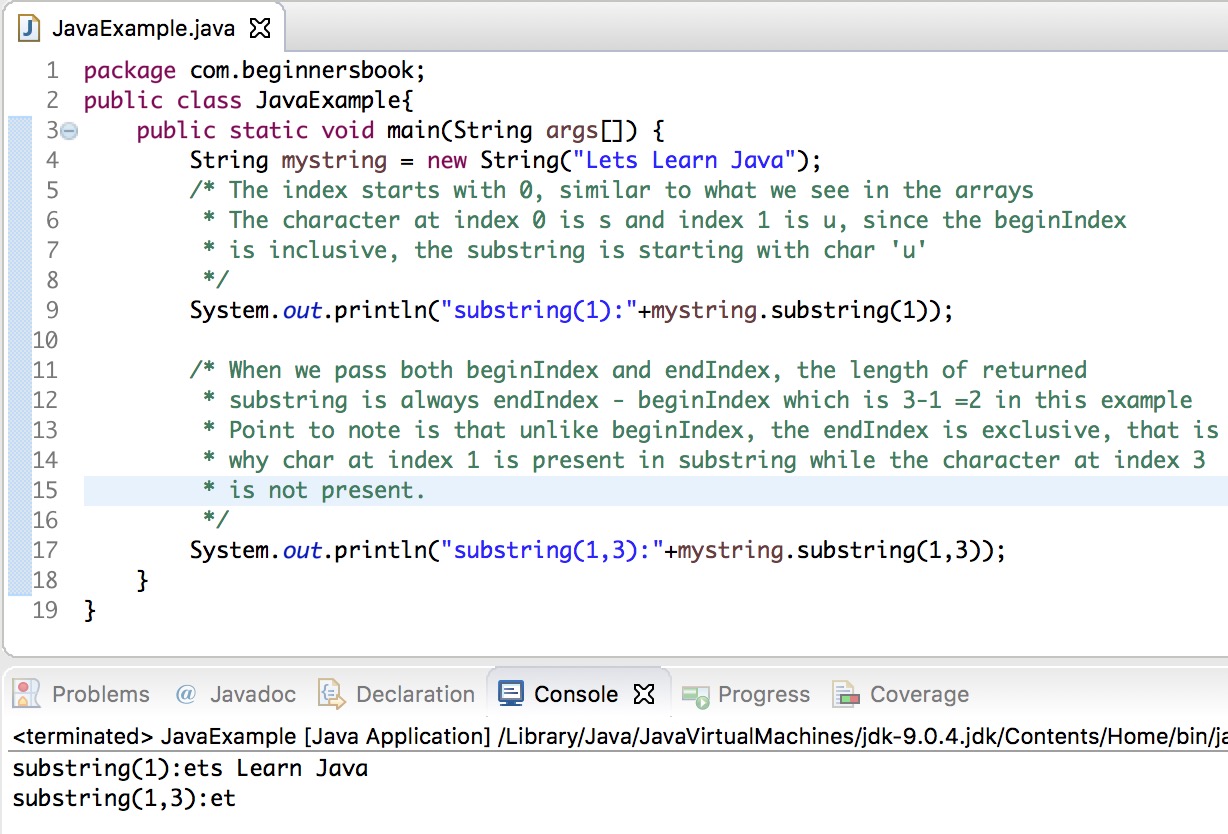

The columns containing the common values are called "join keys". Joining DataFrames in this way is often useful when one DataFrame is a "lookup table" containing additional data that we want to include in the other.

In Spark SQL, the GROUPING SETS clause is a shorthand for a UNION ALL where each leg of the UNION ALL operator performs the aggregation of one grouping set specified in the GROUPING SETS clause. Similarly, GROUP BY GROUPING SETS ((warehouse, product), (product), ()) is semantically equivalent to the union of the results of GROUP BY warehouse, product, GROUP BY product, and a global aggregate.

In this section, you will learn the methods to divide two columns in pandas. Please note that I am implementing all the examples in a Jupyter Notebook.
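A sketch of two common ways to divide one column by another: the / operator and the equivalent Series.div() method. The values are made up. The last row divides by zero to show that pandas returns inf for float division by zero rather than raising an error.

```python
import pandas as pd

# Made-up values; the last total_bill is 0.0 on purpose
df = pd.DataFrame({"tip": [4.0, 1.5, 2.0], "total_bill": [20.0, 10.0, 0.0]})

# Method 1: the / operator, element-wise and aligned on the index
df["ratio_op"] = df["tip"] / df["total_bill"]

# Method 2: Series.div(), same result; also accepts fill_value for missing data
df["ratio_div"] = df["tip"].div(df["total_bill"])
print(df)
```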

In this post, you will learn, by example, how to concatenate two columns in R. As you will see, we will use R's $ operator to select the columns we want to combine. First, you will learn what you need to follow the tutorial. Second, you will get a quick answer on how to merge two columns.

After this, you will learn a couple of examples using 1) paste(), 2) str_c(), and 3) unite(). In the final section of this tutorial on concatenating in R, you will learn which method I prefer and why; that is, you will get my opinion on why I like the unite() function. In the next section, you will learn about the requirements of this post.

In pandas, you can use DataFrame.apply() to concatenate multiple column values into a single column, with slightly less typing, and it scales better when you want to join multiple columns.
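A pandas sketch of the DataFrame.apply() concatenation, shown next to the plain + operator for comparison. The name columns are hypothetical; for non-string columns, convert them first with .astype(str), since " ".join only accepts strings.

```python
import pandas as pd

# Hypothetical name columns
df = pd.DataFrame({"first": ["Ada", "Alan"], "last": ["Lovelace", "Turing"]})

# Row-wise apply: " ".join receives each row's values (all strings here)
df["full_apply"] = df[["first", "last"]].apply(" ".join, axis=1)

# Vectorised alternative for string columns
df["full_plus"] = df["first"] + " " + df["last"]
print(df)
```

Adding more columns to the selection list extends the apply() version without writing more + operators.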

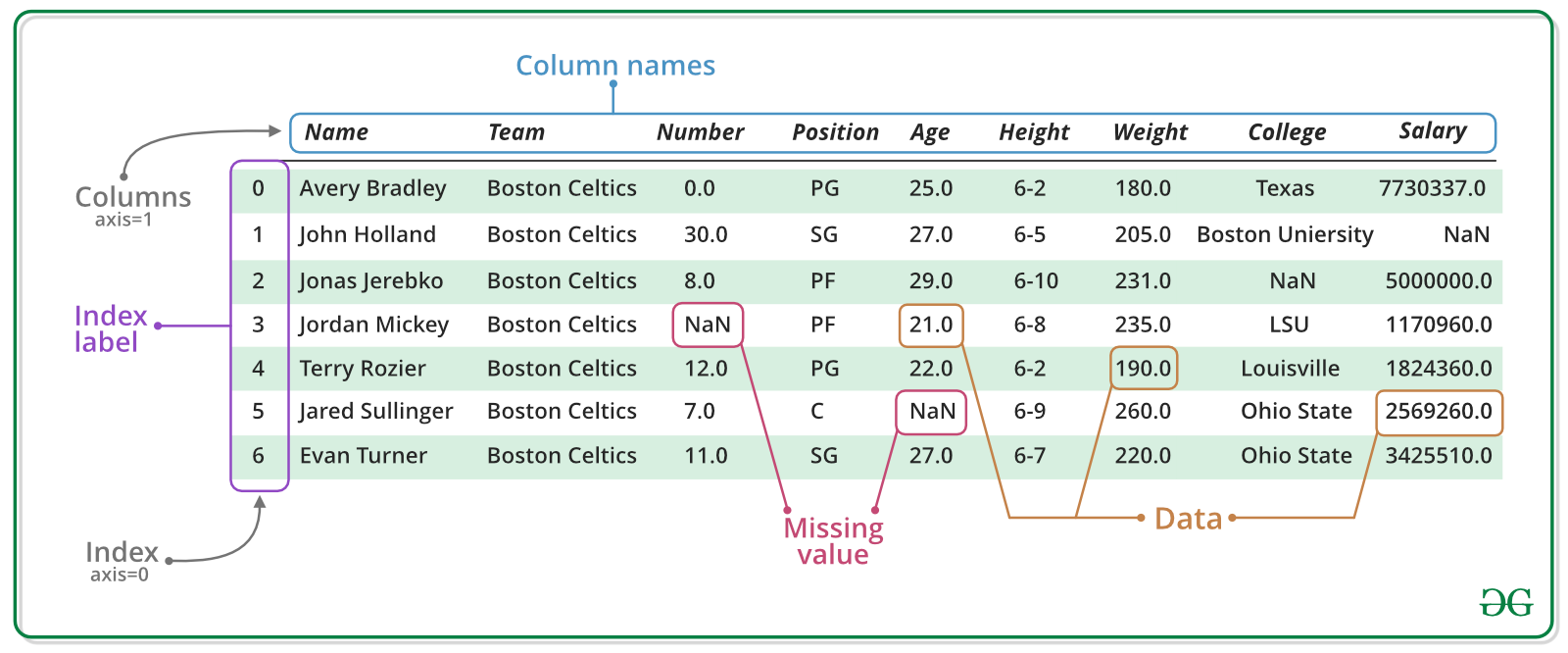

However, our purpose is slightly different: one of the columns holds the dictionary keys and the other holds the values. To create a dictionary from two column values, we first create a pandas Series with the key column as the index and the other column as the values, and then apply the to_dict() function to get the dictionary.

When counting within groups, the major distinction to keep in mind is that count will not include NaN values whereas size will. Depending on the data set, this may or may not be a useful distinction. In addition, the nunique function excludes NaN values from the unique counts.
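A sketch comparing count(), size(), and nunique() on grouped data containing NaN, including nunique(dropna=False), which counts NaN as a value of its own. The data is made up.

```python
import numpy as np
import pandas as pd

# Made-up data with missing values in "smoker"
df = pd.DataFrame({
    "sex": ["F", "F", "M", "M", "M"],
    "smoker": ["Yes", np.nan, "No", "No", np.nan],
})
g = df.groupby("sex")["smoker"]

counts = g.count()                     # non-missing values only
sizes = g.size()                       # all rows, NaN included
uniques = g.nunique()                  # distinct values, NaN excluded
uniques_nan = g.nunique(dropna=False)  # NaN counted as its own value
```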

Keep reading for an example of how to include NaN in the unique value counts.

You can also use the GROUP BY clause without applying an aggregate function. The following query gets data from the payment table and groups the result by customer id.
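A runnable sketch of such a query. It swaps in SQLite (via Python's sqlite3) for PostgreSQL, and the payment table is a hypothetical, minimal stand-in with made-up rows. Without an aggregate, GROUP BY returns one row per customer_id, much like SELECT DISTINCT; adding COUNT(*) turns each group into summary information.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, minimal payment table
conn.execute("CREATE TABLE payment (payment_id INTEGER, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO payment VALUES (?, ?, ?)",
    [(1, 341, 7.99), (2, 341, 1.99), (3, 342, 5.99), (4, 343, 4.99), (5, 343, 0.99)],
)

# GROUP BY without an aggregate: one row per customer_id
customers = conn.execute(
    "SELECT customer_id FROM payment GROUP BY customer_id ORDER BY customer_id"
).fetchall()

# With an aggregate: number of payments per customer
payment_counts = conn.execute(
    "SELECT customer_id, COUNT(*) FROM payment GROUP BY customer_id ORDER BY customer_id"
).fetchall()
conn.close()
```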

For each group, you can apply an aggregate function such as MIN, MAX, SUM, COUNT, or AVG to provide more information about each group. Below, I use the agg() method to apply two different aggregate methods to two different columns. I group by the sex column and for the total_bill column, apply the max method, and for the tip column, apply the min method. For example, I want to know the count of meals served by people's gender for each day of the week.

So, call the groupby() method and set the by argument to a list of the columns we want to group by. When grouping by a single column, you can also apply the describe() method to a numerical column. Below, I group by the sex column, reference the total_bill column, and apply the describe() method to its values. The describe() method outputs many descriptive statistics.
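A sketch of the last three examples, on a made-up sample with the tips column names: max for total_bill and min for tip per sex, the count of meals by sex for each day (a list passed to by), and describe() on total_bill within each sex group.

```python
import pandas as pd

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})

# Different aggregate methods for different columns
bill_max_tip_min = tips.groupby("sex").agg({"total_bill": "max", "tip": "min"})

# Count of meals by sex for each day: "by" takes a list of columns
meals = tips.groupby(by=["sex", "day"]).size()

# describe() on one numeric column within each group
bill_summary = tips.groupby("sex")["total_bill"].describe()
print(bill_summary)
```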

Learn more about the describe() method on the official documentation page.

A related exercise: write a pandas program that groups a dataset by two columns and then sorts the aggregated results within the groups. The apply() method lets you apply an arbitrary function to the group results. Grouping by df.index // 5, for example, uses an array of integer labels (0 for the first five rows, 1 for the next five, and so on) to decide which rows fall into each group. Missing (NaN) values are excluded from aggregate functions automatically in groupby.
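A sketch of the points in this paragraph on made-up data: fixed-size blocks via df.index // 5, sorting aggregated results within each group (here by resetting the index and sorting on two keys, one of several possible ways), apply() with an arbitrary function (the sum of the two largest bills per group, chosen only for illustration), and NaN being skipped by an aggregate.

```python
import pandas as pd

# index // 5 maps rows 0-4 to label 0 and rows 5-9 to label 1
values = pd.DataFrame({"value": range(10)})
block_sums = values.groupby(values.index // 5)["value"].sum()

# Made-up sample that reuses the column names of the tips dataset
tips = pd.DataFrame({
    "sex": ["Female", "Male", "Male", "Female", "Male", "Female"],
    "day": ["Sun", "Sun", "Sat", "Sat", "Sun", "Sat"],
    "total_bill": [16.99, 10.34, 21.01, 23.68, 24.59, 25.29],
    "tip": [1.01, 1.66, 3.50, 3.31, 3.61, 4.71],
})

# Group by two columns, aggregate, then sort descending within each "sex"
totals = tips.groupby(["sex", "day"])["total_bill"].sum().reset_index()
sorted_within = totals.sort_values(["sex", "total_bill"], ascending=[True, False])

# apply(): an arbitrary function per group
top_two = tips.groupby("sex")["total_bill"].apply(lambda s: s.nlargest(2).sum())

# NaN is skipped by the aggregate: the mean of [1.0, NaN] is 1.0
with_nan = pd.DataFrame({"key": ["a", "a", "b"], "val": [1.0, float("nan"), 3.0]})
means = with_nan.groupby("key")["val"].mean()
```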
